While we still have two more weeks until we present, the blogs are due tonight! So I will do my best to give an update on this week and an idea of what we are doing going forward.

This Week

This week I focused on the drill-down functionality; I wanted to get as much of it done as I could before the final sprint. In the end, I got about three-quarters of the work done. Throughout the week I collaborated with Patrick quite a bit, as our work overlapped heavily. By the end of the week, our work was pretty up to date on the merging side of things.

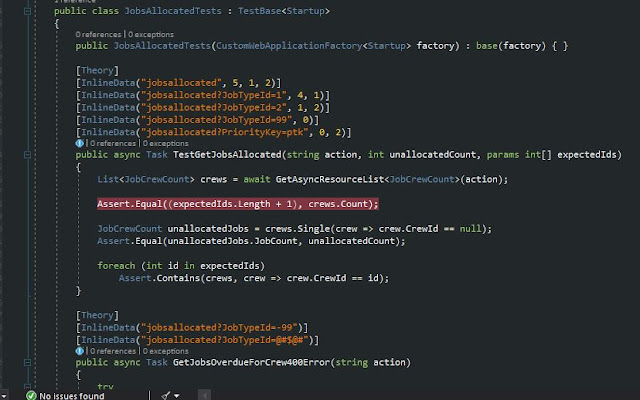

To get the 'ontimeratio' bar chart drill-down working, I had to make some changes to Chris's Jobs endpoint in the dispatcher API. The endpoint now accepts an extra, optional parameter; when it is given, the database query returns only the jobs that were late or only the jobs that were on time, depending on its value. It is super satisfying to see the drill-down working in action.
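To make the idea of an optional filter parameter concrete, here is a minimal sketch of how such a query might be built. It is not the actual dispatcher API code: the function name `get_jobs`, the `status` parameter with values `"late"` and `"on_time"`, and the `jobs(id, due_at, completed_at)` table are all hypothetical, and the sample rows are made up. The key point is that the `WHERE` clause is only added when the parameter is supplied, so existing callers keep getting every job.

```python
import sqlite3
from typing import Optional


def get_jobs(conn: sqlite3.Connection, status: Optional[str] = None) -> list:
    """Return jobs, optionally filtered to only late or only on-time ones.

    Hypothetical schema: jobs(id, due_at, completed_at), where both timestamps
    are ISO-8601 strings in the same format, so string comparison orders them.
    """
    query = "SELECT id, due_at, completed_at FROM jobs"
    # Only narrow the query when the optional filter is given.
    if status == "late":
        query += " WHERE completed_at > due_at"
    elif status == "on_time":
        query += " WHERE completed_at <= due_at"
    elif status is not None:
        raise ValueError(f"unknown status filter: {status!r}")
    return conn.execute(query + " ORDER BY id").fetchall()


# Made-up sample data in an in-memory database, purely for illustration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE jobs (id INTEGER PRIMARY KEY, due_at TEXT, completed_at TEXT)")
conn.executemany(
    "INSERT INTO jobs VALUES (?, ?, ?)",
    [
        (1, "2019-05-01T10:00", "2019-05-01T09:30"),  # finished early: on time
        (2, "2019-05-01T10:00", "2019-05-01T11:15"),  # finished after due: late
        (3, "2019-05-02T14:00", "2019-05-02T14:00"),  # finished exactly on time
    ],
)

print([row[0] for row in get_jobs(conn)])             # [1, 2, 3]
print([row[0] for row in get_jobs(conn, "late")])     # [2]
print([row[0] for row in get_jobs(conn, "on_time")])  # [1, 3]
```

Rejecting unknown filter values, rather than silently ignoring them, makes a typo in the front end show up as an error instead of an unfiltered chart.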

Sending a different URL request depending on which element the user clicked on the bar chart.
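As a rough illustration of the click-to-URL step, the sketch below maps the label of the clicked bar to a query string. It is written in Python purely for illustration; the real chart code lives in the front end and may look quite different. The `/api/jobs` path, the `status` parameter, the bar labels and the `from_date` parameter are hypothetical.

```python
from urllib.parse import urlencode

# Hypothetical mapping from bar-chart labels to the endpoint's filter values.
BAR_TO_STATUS = {"On Time": "on_time", "Late": "late"}


def drilldown_url(base_url: str, clicked_label: str, **extra_params: str) -> str:
    """Build the request URL for whichever bar the user clicked."""
    try:
        status = BAR_TO_STATUS[clicked_label]
    except KeyError:
        raise ValueError(f"no drill-down defined for bar {clicked_label!r}") from None
    # Keep any existing filters (e.g. a date range) and add the status filter last.
    params = {**extra_params, "status": status}
    return f"{base_url}?{urlencode(params)}"


print(drilldown_url("/api/jobs", "Late"))
# /api/jobs?status=late
print(drilldown_url("/api/jobs", "On Time", from_date="2019-05-01"))
# /api/jobs?from_date=2019-05-01&status=on_time
```

Keeping the label-to-filter mapping in one table means adding a new bar only requires a new entry, not a new branch in the click handler.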

I noticed I wasn't getting much sun even though it's so nice outside, so this week I began taking short 5 to 10 minute sun breaks whenever I felt like it. This also gave me time to clear my head or think about the problem I was working on, which I think helps a lot in a development environment. In my next job, I think I'll prioritize these mini-breaks a lot more.

Kayla left this week for greener pastures in a field she is more familiar with (medical). Kayla was the operations manager and the one who got me and old mate Pat brought on as project students in the first place, so we owe a lot to her for giving us this opportunity. I think we will miss having her around for the last couple of weeks we have at fieldGo. Now we are just a bunch of blokes, haha.

Going Forward

The final sprint will focus on the presentability of the project, since we will be using it as a demo at our poster evening in a couple of weeks. This means working on outstanding graphical bugs as well as some crucial functionality bugs. It will also involve a wide range of testing, which will hopefully let us catch anything that might break the application during a demo. Our current dataset could also use more fleshing out; I think we would be wise to select a dataset that looks a little more realistic.

I still intend to put in a little extra time after the demo to clean up our work, so that Chris doesn't take on a huge burden implementing it after we leave.

After work we had a meetup at a pub to see Kayla off. It was super enjoyable and we had some good laughs. I think I've been lucky to get this position and to be a part of this super cool team, even if it was just for a short amount of time.

Thanks for reading and thank you to fieldGo for taking us on this semester. It's been great.